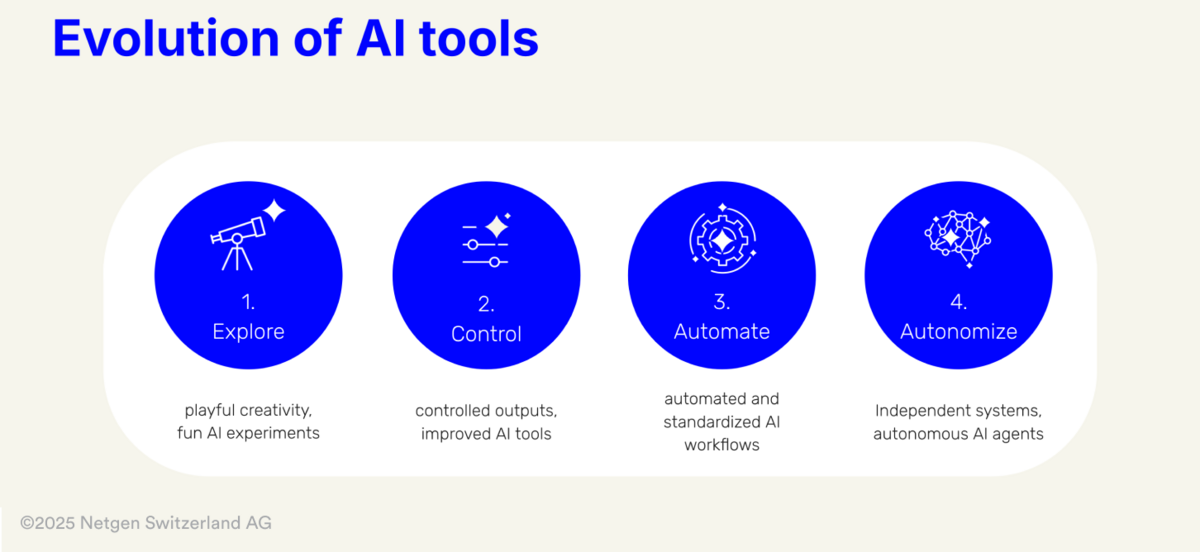

Generative AI has become a dynamic field, and the evolution of its tools can be divided into four distinct phases: "Explore, Control, Automate, Autonomize". Each phase marks a significant shift in how creatives interact with technology, enabling increasingly precise and efficient results.

This classification of the evolution of design tools, first outlined in my previous blog post "AI & Creativity 4: Use Cases, Ideas, and Concepts at Netgen", provides a valuable framework for understanding the technological evolution within the creative domain.

In the "Explore" phase, experimental approaches dominated, allowing creative possibilities to unfold playfully and with open-ended results – from "Cats in van Gogh's style" to humorous scenarios like "Royals at a Party."

The "Control" phase, by contrast, focuses on precise adjustments and targeted steering. It marks a major breakthrough: creatives can now use state-of-the-art tools and interfaces to control and adapt their outputs efficiently, giving their ideas a specific shape and expression.

This article focuses on the "Control" phase, exploring how it revolutionizes creative practice. We examine technologies and workflows across image, video, sound, and 3D categories that enhance efficiency while promoting artistic freedom.


1. The Importance of the "Control" Phase in Creative Processes

The "Control" phase bridges the gap between creative freedom and precise control. Creatives gain access to tools that:

- Precisely control outputs: through parameters like style, structure, and details.

- Ensure consistency: particularly in projects requiring multiple elements or repetitions.

- Save time and resources: by minimizing manual post-processing.

«As AI becomes a commodity, seamless integration of functionality into UI and UX will be crucial for providers to stand out from the flood of tools.»


New Tools and Their Role in the "Control" Phase

Today’s generation of AI tools offers intuitive interfaces and workflows that make control not only possible but user-friendly. Tools like MidJourney’s Moodboards, ControlNet, Motion Brushes, or Krea.AI’s Image Editor exemplify this remarkable shift.

Enough theory – let’s explore concrete applications of these tools across the media categories of image, video, sound, and 3D:

2. Image: Precise Control in Visual Design

Style Transfer

One of the central aspects of image editing in the "Control" phase is style transfer. It allows specific styles, color palettes, or textures to be applied precisely to new content. This not only simplifies the realization of creative visions but also enables reinterpretations and stylistic expansions of existing works.

Whether for branding, concept art, or other visual media – style transfer optimizes and personalizes creative output. Compared to traditional methods, it transfers styles quickly and accurately, making it especially valuable for large-scale projects. Let’s take a look at some of the best tools.

Moodboards

MidJourney has introduced a game-changing feature with its personalization options and moodboards:

- How it works: Users create moodboards by uploading a selection of images. The AI then generates a corresponding "style code" that can be referenced to produce outputs in the style of the uploaded images.

- Applications: Branding, concept art, fashion design, extension of imagery.

- Advantage: Consistency and individuality through visual control.

Here is an example from Rory Flynn:

Mixing Consoles

With tools like Leonardo.AI’s Realtime Gen, you can use simple sliders to control the impact of different parameters and get live feedback on your output:

- How it works: Users adjust sliders for specific parameters, such as color tones, texture depth, stylistic intensity, or even expressions. These adjustments are applied to the output instantly, providing real-time feedback and enabling iterative refinement of the design.

- Applications: Adjusting style, background, or character parameters to create variations of an idea with ease.

- Advantage: Direct feedback and easy, intuitive handling.

An impressive example posted by Billy Boman:

Image Composition

Mapping

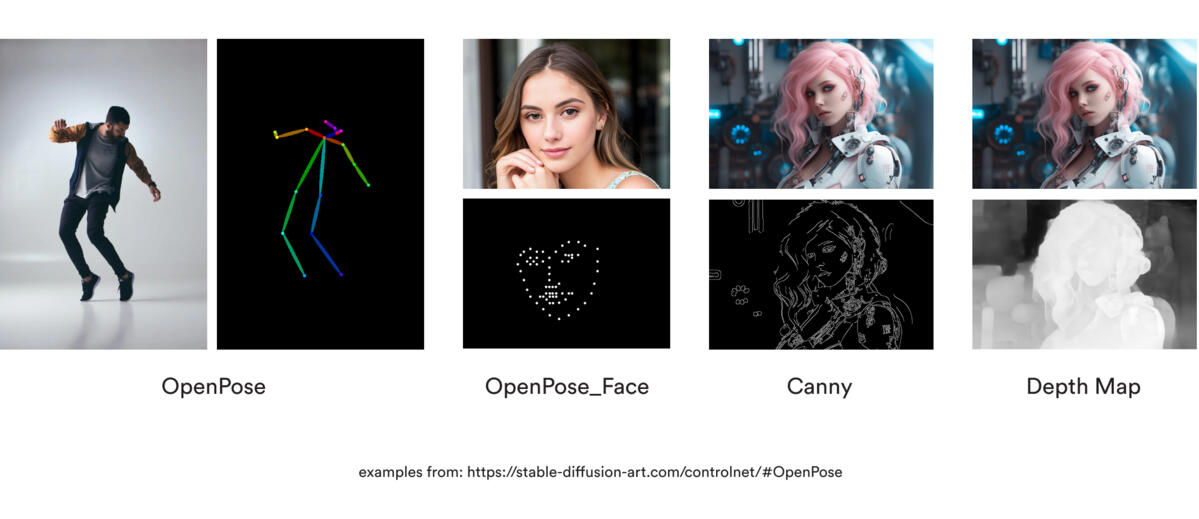

ControlNet revolutionizes generative AI by allowing users to guide outputs with precision using control parameters like depth maps, edge maps, or sketches.

- How it works: ControlNet feeds external conditioning inputs, such as human poses, object boundaries, or depth maps, into Stable Diffusion models as additional guidance, so the generated image follows the given structure closely. For example, an architect can use a "depth map" to create consistent structural designs, or a storyboard artist can use "edge maps" to refine animations. Learn more in this detailed guide on ControlNet.

- Applications: Widely adopted in architectural visualization, character design, and video production, ControlNet bridges the gap between creative ideation and practical implementation.

- Advantage: It enables creators to maintain full control over artistic elements, ensuring outputs align precisely with project requirements while minimizing manual adjustments.
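To make the "edge map" idea more concrete: ControlNet does not take a sketch or photo directly; it takes a preprocessed control image. A common preprocessor for the edge mode is the Canny detector (for example OpenCV's `cv2.Canny`). The following is a simplified, dependency-free stand-in, not ControlNet's own preprocessing: it computes a Sobel gradient magnitude on a grayscale image (assumed here to be a nested list of values between 0.0 and 1.0) and thresholds it into a binary edge map. The function name `sobel_edge_map` and the default threshold are illustrative assumptions.

```python
from typing import List

# Hypothetical representation for this sketch: grayscale pixels in 0.0-1.0.
Image = List[List[float]]


def sobel_edge_map(img: Image, threshold: float = 0.5) -> List[List[int]]:
    """Return a binary edge map (1 = edge, 0 = no edge).

    Uses the Sobel operator to estimate the horizontal and vertical
    brightness gradients, then marks pixels whose gradient magnitude
    reaches the threshold. Border pixels are left as 0 for simplicity.
    """
    h, w = len(img), len(img[0])
    # Sobel kernels for horizontal (kx) and vertical (ky) gradients.
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            # Convolve the 3x3 neighborhood with both kernels.
            for dy in range(-1, 2):
                for dx in range(-1, 2):
                    p = img[y + dy][x + dx]
                    gx += kx[dy + 1][dx + 1] * p
                    gy += ky[dy + 1][dx + 1] * p
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                out[y][x] = 1
    return out


if __name__ == "__main__":
    # A 5x5 image that is dark on the left and bright from column 3 on.
    img = [[1.0 if x >= 3 else 0.0 for x in range(5)] for _ in range(5)]
    for row in sobel_edge_map(img):
        print(row)
```

In a real workflow the resulting map would typically be scaled to a 0-255 image (white edges on black) and passed as the conditioning image to a ControlNet pipeline, for instance `StableDiffusionControlNetPipeline` in Hugging Face diffusers. A proper Canny detector adds smoothing, non-maximum suppression, and hysteresis thresholding, which yields thinner, cleaner lines than this plain gradient threshold.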

Real-Time Drawing

KREA.ai introduces a groundbreaking real-time drawing functionality that empowers users to create and refine visuals interactively.

- How it works: Users draw on a digital canvas while AI refines sketches in real-time. Tools like adjustable brush sizes, erasers, and undo/redo ensure smooth workflows.

- Applications: Ideal for concept creation and storyboarding, this feature simplifies turning ideas into polished outputs with real-time adjustments.

- Advantage: Dynamic workflows are supported through seamless integration of AI-driven refinements, boosting creativity while saving time.

Learn more about this functionality in KREA.ai's Real-Time Drawing Guide.

Image Editors

Krea.AI’s Image Editor, for example, allows real-time editing and upscaling:

- How it works: Combines text prompts with interactive tools on a canvas.

- Applications: Pattern design, product visualization, UI/UX prototyping.

- Advantage: Flexible real-time adjustments of specific areas.

An interface that combines, for example, mixing-console sliders with the canvas approach can be found in Vizcom's Mix feature.

3. Video: Controlled Creativity in Motion

Video is one of the most dynamic media formats, offering entirely new opportunities for creatives in the "Control" phase. From animation to editing complex sequences or extending them, modern tools provide precise control options that enhance both efficiency and artistic quality.

Video generation has made an incredible leap forward, with all major providers accelerating their development in the last quarter of 2024: Hailuo AI, LumaLabs, Minimax, Runway, and Kling AI.

Do you remember "Will Smith" eating spaghetti in 2023? Check it out now: 2025.

OpenAI's long-awaited SORA was finally released, only to be dethroned almost immediately by Google's Veo 2, which impressed with its "physical understanding" in animations. Have a look at how it handles the meat slices in comparison (by Andreas Horn) or the lights and shadows (by Lazlo Gaal). Impressive, isn’t it?

Motion Brushes

Motion Brush is a standout tool for creating animations, giving users precise control over movement through motion paths on an interactive canvas. It’s particularly versatile in fields like animation, visual effects, and explainer videos.

A notable example is Kling.AI’s Motion Brush, which offers detailed movement control. Learn more in this article.

Another example is the Motion Brush available in Runway. More details can be found at the Runway Gen-2 Motion Brush Academy.

Video-to-Video

Style transfer for video enables existing videos to be analyzed and their styles – such as color schemes, textures, or visual effects – to be applied to new sequences. This technology is widely used in film post-production and visual effects to ensure consistent styling across series productions. A key advantage is achieving consistency across multiple clips while allowing room for creative reinterpretation.

Another major benefit is that "low-key" input videos can be transformed into the desired look via style references (text, image, or video prompts), as this example from MoveAI shows:

Keyframes

Keyframes provide fine-grained control over animations by allowing start, middle and end frames to be uploaded as images, defining precise movements and stylistic transitions between sequences. Here's an example from Rory Flynn created with Runway:

Another prime example is the Luma Keyframes Tool, which supports seamless motion and real-time previews. Learn more in the Luma Keyframes Documentation.

OpenAI's SORA takes a leap forward with its 'storyboard' feature. It offers advanced control over AI-generated sequences, allowing users to outline and guide content generation with unmatched precision and narrative coherence.

In December 2024, Runway unveiled an innovative approach to editing image and video generations through a graph-structured mapping of 'latent space.' This method allows for non-linear exploration of creative outputs, offering unprecedented flexibility and precision in navigating and refining AI-generated content. Learn more about this breakthrough at Runway's Creativity as Search.

4. Sound: Controlling Acoustic Outputs

The ability to control acoustic outputs is a game-changer for producing high-quality audio content. AI-powered tools allow for fast and precise optimization of soundtracks, from noise removal to fine-tuning intonation and volume.

A notable development in this field is Adobe’s Project Super Sonic, which is based on three innovative features:

- Text-to-audio generation: Users can create realistic sound effects through text prompts, e.g., “a door creaking open.”

- Object recognition-based sound generation: The AI analyzes video frames, identifies objects, and generates corresponding sound effects, such as a toaster producing the sound of toast popping up.

- Voice-controlled sound generation: Users can mimic desired sounds, and the AI converts these into professional-quality audio effects.

These features enable more precise and faster audio track creation, opening new creative possibilities.

AI-Assisted Audio Design

Tools for improving and adjusting audio tracks:

- How it works: Editing intonation, volume, and noise reduction using AI.

- Applications: Podcasts, voiceovers, film dialogues.

- Advantage: Saves time through automated optimization.

Adobe Podcast is a powerful tool for audio enhancement. With AI-driven features like noise reduction and voice enhancement, it simplifies audio editing for both beginners and pros. Ideal for podcasters and audio editors, it efficiently produces high-quality results while saving time.

ElevenLabs: Advanced Sound Effects

ElevenLabs has revolutionized audio content creation with its cutting-edge sound effects generation tool. This platform allows users to input descriptions or select from a library of audio prompts to produce precise and high-quality sound effects. This tool simplifies the sound design process by automating complex audio tasks, saving time while maintaining exceptional quality. Learn more at ElevenLabs Sound Effects.

Suno: Audio Inputs – Make a Song from Any Sound

Suno introduces an innovative way to create music using any sound as input. This tool allows users to transform everyday sounds into musical compositions through advanced AI algorithms.

- How it works: Users provide an audio input, such as a voice snippet or ambient sound, which the AI then processes and converts into a musical arrangement.

- Applications: Ideal for music production, sound design, and experimental audio projects.

- Advantage: Suno’s tool bridges the gap between raw sound and creative output, enabling users to generate unique music effortlessly. Learn more at Suno’s Audio Inputs.

5. Adding Dimensions – 3D

AI is revolutionizing 3D element generation, offering unparalleled precision and fostering innovative designs. By leveraging AI-driven tools, creators can efficiently refine concepts or construct intricate models with remarkable accuracy. This transformative integration not only streamlines workflows but also unlocks new creative horizons, empowering designers to push boundaries and achieve results previously thought unattainable. The synergy between AI and 3D workflows is setting new benchmarks for efficiency and creativity in the field.

Generative 3D with Vizcom

Vizcom recently unveiled an innovative tool for generating 3D content, potentially transforming the creative process. The tool uses AI to create realistic 3D models from simple sketches or concepts, seamlessly integrating generative AI into workflows:

- How it works: Users can upload 2D sketches or drafts, and the AI automatically generates detailed 3D models. The tool also offers advanced options like perspective adjustment, material simulation, and texture management to refine the output. These features ensure that models are both visually appealing and technically functional.

- Applications: Product design, architecture, visual effects, and prototyping.

- Advantage: Accelerates the design process and provides an intuitive way to transform ideas into actionable models. Learn more at Vizcom's 2D to 3D Feature.

Image-to-3D with Stable Point Aware 3D

Generating 3D objects from 2D input has reached new heights with Stability AI’s Stable Point Aware 3D (SPAR3D).

- How it works: The model turns a single input image into a 3D model, using an intermediate point cloud that users can edit before the final mesh is generated. It optimizes resource usage while maintaining model precision and fidelity, making it suitable for various applications.

- Applications: Its versatility shines in AR/VR experiences, architectural renderings, and game development, where high-quality 3D assets are essential.

- Advantage: By reducing computational overhead, this approach enables faster prototyping without compromising detail or accuracy. Learn more about this advancement in Stability AI’s official announcement.

Trellis: Transforming 3D Asset Creation

Microsoft’s Trellis introduces a groundbreaking approach to creating 3D assets by leveraging AI to streamline and optimize the entire workflow.

- How it works: Trellis takes text prompts or images as input and generates high-quality 3D assets in several output formats, such as meshes, radiance fields, or 3D Gaussians. Its unified structured 3D representation aims to preserve both geometric structure and visual detail, making it a good fit for complex modeling tasks.

- Applications: Well suited to game development, virtual reality environments, and industrial design, where Trellis can accelerate asset creation while maintaining high quality.

- Advantage: By reducing the time and effort required for manual adjustments, Trellis empowers creators to focus on innovative and creative aspects of their projects. Learn more at Microsoft Trellis.

Here is an example from Will Eastcott:

Krea.AI: Image to 3D Objects

One last example, showing how quickly AI is blurring the boundaries between 2D and 3D, is the Krea.AI feature that converts images into 3D objects and integrates them in real time:

6. Conclusion: The "Control" Phase as a New Standard for Creative Work

The "Control" phase represents a turning point in the evolution of generative AI. Tools like MidJourney, ControlNet, Motion Brush, and KREA.ai offer a new level of creative control, enhancing productivity and expanding artistic freedom.

For creatives, this signifies a future with precise tools to bring their visions to life, whether in image, video, sound, or 3D. Ultimately, however, these tools are just that: tools. Standing out from the crowd increasingly depends on having the right idea to give these technologies a unique expression.

«These developments mark not an end but the beginning of a new era of creative work, where ideas make the difference.»


What experiences have you had with these tools? We look forward to your comments and suggestions!

Epilogue

The next phases, "Automate" and "Autonomize," promise even more exciting developments. In the "Automate" phase, AI will focus on automating repetitive tasks, making creative processes even more efficient. "Autonomize" aims to enable AI to make fully independent creative decisions.

Both phases will be explored in upcoming blog posts. They will be crucial for creatives and companies alike in 2025, driving efficiency and unlocking innovative potential. Stay tuned to discover how these phases will shape the future of creative work!