There is no need for designers to be afraid of AI. However, they should be aware of the potential impact AI may have on their profession and be prepared to adapt their skills accordingly.

- Allowing for more collaboration between humans and machines, with each being able to contribute their own unique strengths to the creative process

- Enabling more rapid ideation and prototyping, as well as more efficient testing and feedback loops

- Helping to identify patterns and trends that may otherwise be missed, and suggesting new ideas or approaches.

- Ultimately, AI has the potential to greatly enhance the creativity of individuals and teams, leading to more innovative and impactful work

Well, you may agree or disagree with the title of this blog post, the opening statement and its derived argumentation, or even with the chosen cover image above – however, you should be aware that none of it was said or made by a human being. It was done with accessible online AI tools (Playground, DALL-E 2, and Outpainting from OpenAI) using text-to-text and image-to-image prompts – but more on this in detail later.

Prologue

You can spin it any way you want: AI is on the rise, and it's here to stay. It will significantly transform the way we work, whether you work in banking, technology, healthcare, medicine, insurance, or especially the creative industries.

Do not get me wrong. I love my design job. Trained as a visual brand designer, a hobby illustrator, an occasional “think outside the box” input giver, and now a UX designer, I do believe creativity (still) plays into the hands of creative human minds. Yet I am totally convinced that AI will play an essential role in every single phase of your creative process. And this will happen very soon: in the last half year, new applications and tools have been appearing almost monthly.

Wherever you find yourself on your creative journey: if you do research, if you want to generate ideas, explore concept variations, test your visualized UI prototypes, or analyze the data reports – AI will be part of your software and applications.

Regardless of whether you:

- Want to write (Playground), translate (DeepL), or correct (Grammarly) catchy headlines or longer texts for your product presentation...

- Visualize graphics (DreamStudio, DALL-E 2, DiffusionBee) or modify them (Outpainting) or color them (Palette), to create unique visual imagery for your brand, independent from stock materials ...

- Generate videos (Imagen Video, Make-A-Video/MetaAI) to add some storytelling to your sales pitch...

- Compose sounds (Harmony.AI) and voices (Sonantic) for the user interface of a device...

- Create multilingual Avatars (Synthesia) for your onboarding training sessions for the international team...

... AI will support and guide you and will, if seamlessly integrated into the workflow, speed up your (creative) work output.

First things first

One of the good things is that to understand and use AI tools, you do not need to understand or write code (I can’t code myself). But being curious by nature, let's have a closer look to at least get a glimpse of what has come up in the last one to one and a half years and how it works:

A brief history

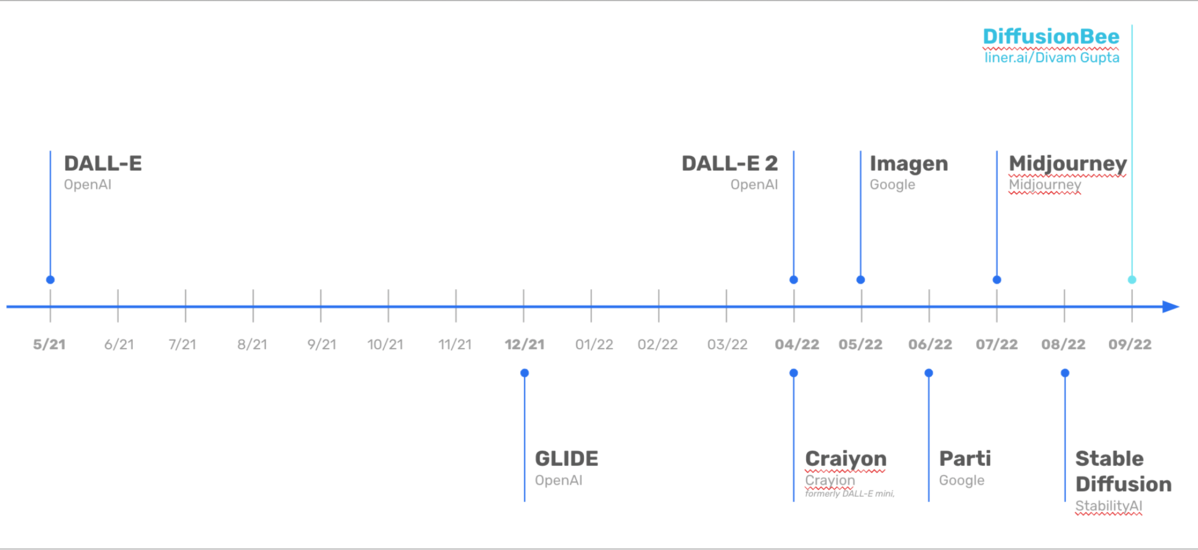

As mentioned before, new AI online platforms and applications for desktop and mobile seem to spring up like mushrooms; here are some well-known ones:

Adapted from Source: Zühlke 2022 / What can I use AI for? / Thomas Dikk, Gabriel Krummenacher, Silvian Melchior / Digital Festival Zürich 2022 / 19. September 2022

How it works ...

... in developer's details and algorithms – no clue. But in simple words, let’s give it a shot and try to understand the so-called diffusion models; platforms like OpenAI, Stability.AI, Midjourney, and Imagen base their applications on them to create, for example, images from text prompts.

In this text-to-image case, AI tries to reverse a process back to its “original state.” During training, noise is added to images step by step (equal to losing information), and the AI learns to reverse this. It uses neural networks, e.g., convolutional neural networks (CNNs), to remove the noise, bringing back the lost information step by step and re-creating an image.
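
For the curious, the two directions described above (adding noise, then removing it) can be sketched in a few lines of Python. This is only a toy illustration under several assumptions: the "image" is a short list of pixel values, the function names `make_alpha_bars`, `add_noise`, and `remove_noise` and the linear noise schedule values are made up for this example, and the exact noise that was added stands in for what a trained neural network would have to predict. It is not how any of the platforms mentioned here are actually implemented.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Share of the original image that survives after each noising step.

    Uses an assumed linear noise schedule; the values are illustrative.
    """
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Forward direction: blend the image with random Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [
        math.sqrt(alpha_bar) * p + math.sqrt(1.0 - alpha_bar) * n
        for p, n in zip(pixels, noise)
    ]
    return noisy, noise


def remove_noise(noisy, predicted_noise, alpha_bar):
    """Reverse direction: undo the blend, given a prediction of the noise."""
    return [
        (x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
        for x, n in zip(noisy, predicted_noise)
    ]


rng = random.Random(0)
image = [0.0, 0.25, 0.5, 0.75, 1.0]  # a tiny stand-in for real pixel data
alpha_bars = make_alpha_bars(1000)

# Noise the image halfway through the schedule, then reverse it.
# Here the true noise is passed in; a real model has to predict it.
noisy, noise = add_noise(image, alpha_bars[499], rng)
restored = remove_noise(noisy, noise, alpha_bars[499])
```

In this sketch the image comes back intact only because the true noise is handed to `remove_noise`. In a real diffusion model, the neural network has to estimate that noise from the noisy image (and the text prompt), which is why generation happens over many small steps.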

Adapted from Source: Zühlke 2022 / What can I use AI for? / Thomas Dikk, Gabriel Krummenacher, Silvian Melchior / Digital Festival Zürich 2022 / 19. September 2022

As an image says more than hundreds of words, and a video contains tons of images, please watch the following YouTube video from AssemblyAI to dive deeper into it:

Diffusion models explained in 4-difficulty levels by AssemblyAI

Prompts

In general, "prompts" are the input you give to the AI generator tools to achieve the output you are looking for. This could be:

- Text-to-Text prompts: Using natural language, could be a simple text line or a question, e.g., "What are the advantages of Traditional Chinese Medicine?"

- Text-to-Image prompts: Describe an idea you have in mind to get out a visualization, e.g., "An astronaut riding a black horse on the moon."

- Image-to-Image prompts: Uploading an image to modify it, e.g., to add new elements to it, to extend the motif, or to bridge the gap between two different images.

- Text-to-Video prompts: Similar to text-to-image; however, you will get a short video as output from your description, e.g., “A teddy bear painting a portrait.”

- Speech-to-XYZ prompts: Just to mention it, speech is and will be a source for prompts, e.g., as in real-time translation (speech-to-speech)

1. Text-to-Text prompts

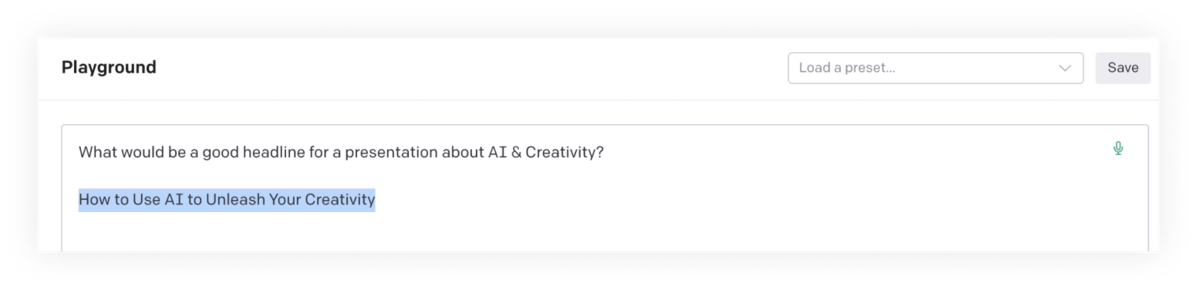

With tools such as Playground (OpenAI) or NeuroFlash, you can create your own texts to fit your needs, e.g., for websites, marketing, social media posts, or presentations ...

... remember this blog post's title, "How to use AI to unleash your creativity"? It was created by giving Playground the prompt: "What would be a good headline for a presentation about AI & Creativity?"

The same was applied to the lead text and the argumentation you read at the beginning of this article:

Screenshots from Playground, OpenAI

2. Text-to-Image prompts

These prompts are getting increasingly popular, especially now that the tools (e.g., DALL·E 2, NightCafé) have left their beta phase behind and are open to everyone.

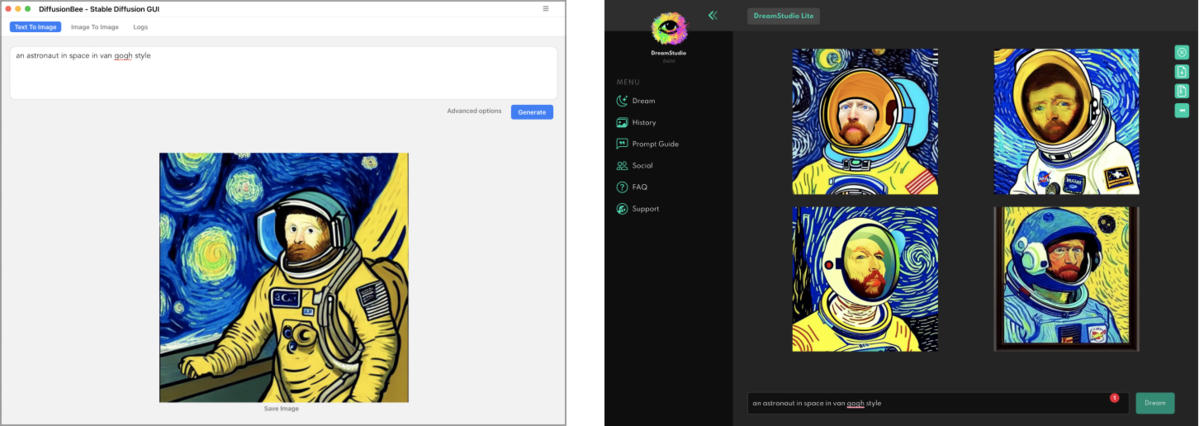

Some outputs from two different platforms (DiffusionBee, DreamStudio), given the same text-to-image prompt: "An astronaut in space in van Gogh style."

Screenshots from DiffusionBee, DreamStudio

3. Image-to-Image prompts

Again, do you remember the cover image of this post? It was created by uploading a netgen illustration to Outpainting, where it can be modified with text prompts, e.g., to extend the motif's format by adding elements like "draw a robot." For each prompt, you will get four visual proposals to choose from. The capability of this tool is quite impressive, as you'll experience in this demo video.

Screenshots from Outpainting (OpenAI)

4. Text-to-Video prompts

The most impressive prompt will be text-to-video. Just a couple of weeks ago, Meta AI announced its Make-A-Video platform, and right after that, Google took up the battle with its Imagen Video platform.

Those applications are not yet open to the public. However, they look stunning and promising – why both of them are obsessed with “teddy bears” to show off their creative capabilities, I do not know.

Make-A-Video by Meta AI & Imagen Video by Google

5. Speech-to-XYZ prompts

You already know speech prompts from your Alexa, Siri, or Google Home. The next level is real-time translation; thanks to Meta AI Speech-to-Speech, we will be spared putting a babel fish (probably the oddest thing in the universe) into our ears, as this demo video shows:

The first AI-powered speech translation system for a primarily oral language | Meta AI

Issues

"Remember, with great power comes great responsibility." this adage from Spider-Man fits again. As the AI generators crawl unbelievable amounts of sources and adopt label and tagging systems mostly without any sensitivity, ethics, or morals, some adverse side effects appear.

"These companies control the tooling development environments, languages, and software that define the AI research process – they make the water in which AI research swims."

Meredith Whittaker – The Steep Cost of Capture; Interactions, Nov/Dec 2021

Coded Bias

Bias and coding were, are, and will be a major issue and, of course, not limited to AI image tools, as these tools are only as good as the quality of the data they have been trained on. You may have watched the documentary "Coded Bias" about Joy Buolamwini, an MIT Media Lab researcher. She revealed biases in the algorithms of facial recognition systems.

Coded Bias: www.codedbias.com

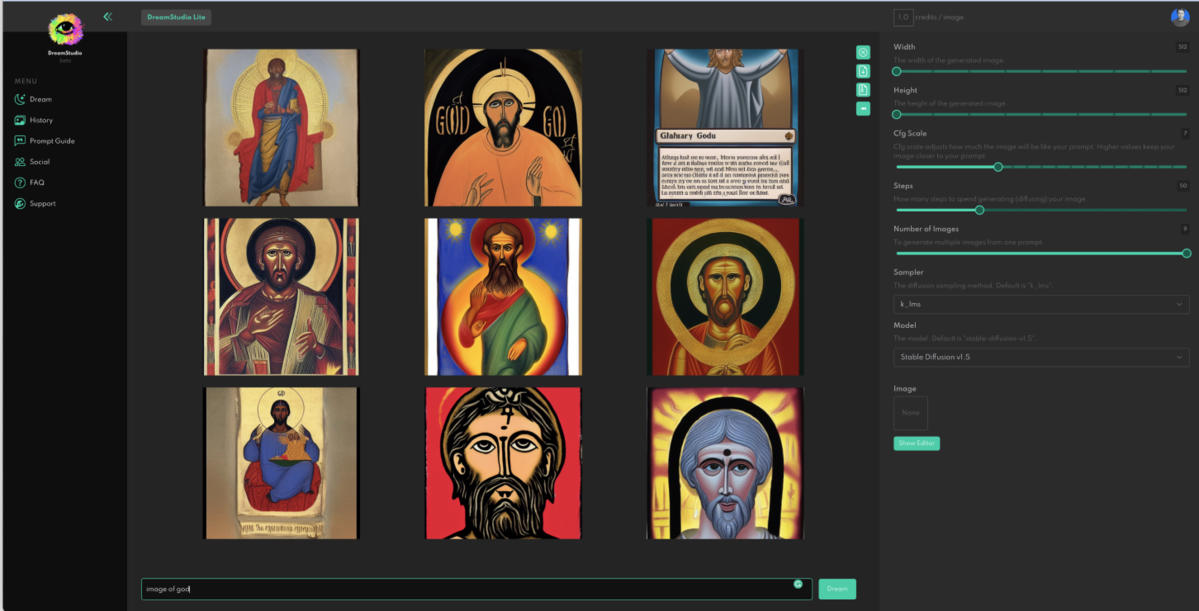

Religion: Back to our AI tools. With the text-to-image prompt "Image of God," the probability is high that you will get a male, Jesus-lookalike white dude. Reasons for this may be that only sources with Christian images had been crawled, or the fact, known to us humans, that in Islam it is not allowed to depict God, or that your prompt was not specific enough for the tool and you have to add, e.g., "Buddhist" to it.

Screenshot DreamStudio, prompting “image of God”

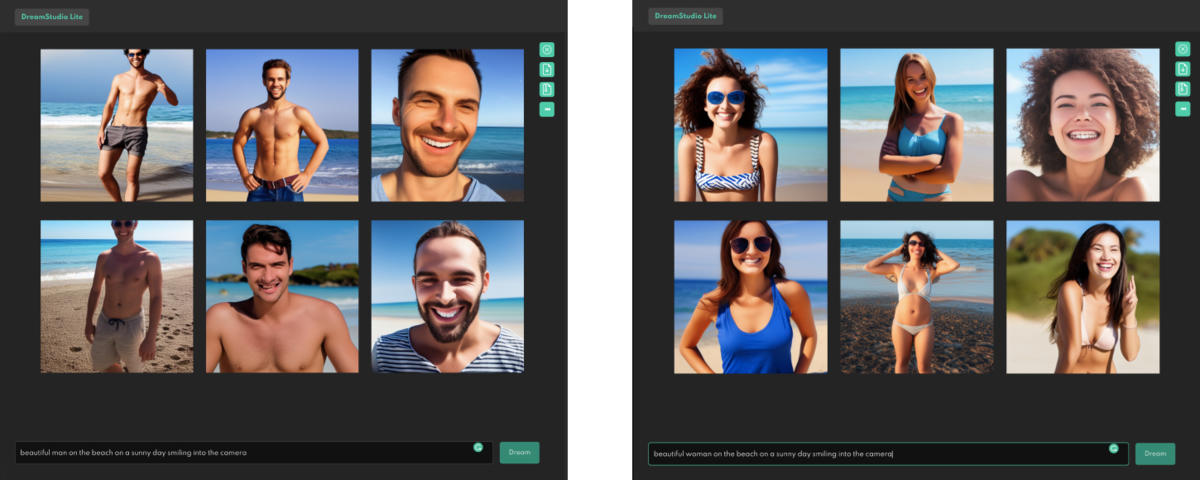

Beauty: the prompt "beautiful man/woman on the beach smiling into the camera" shows the common skinny/sporty interpretation garnished with a racial profile. If some of these images look like familiar stock photos, this is mainly because of the pictures the AI crawled for training: it took whatever it got. Sometimes it even tries to imitate stock watermarks, which raises legal issues.

Screenshot DreamStudio, prompting “beautiful man/woman on the beach smiling into the camera”

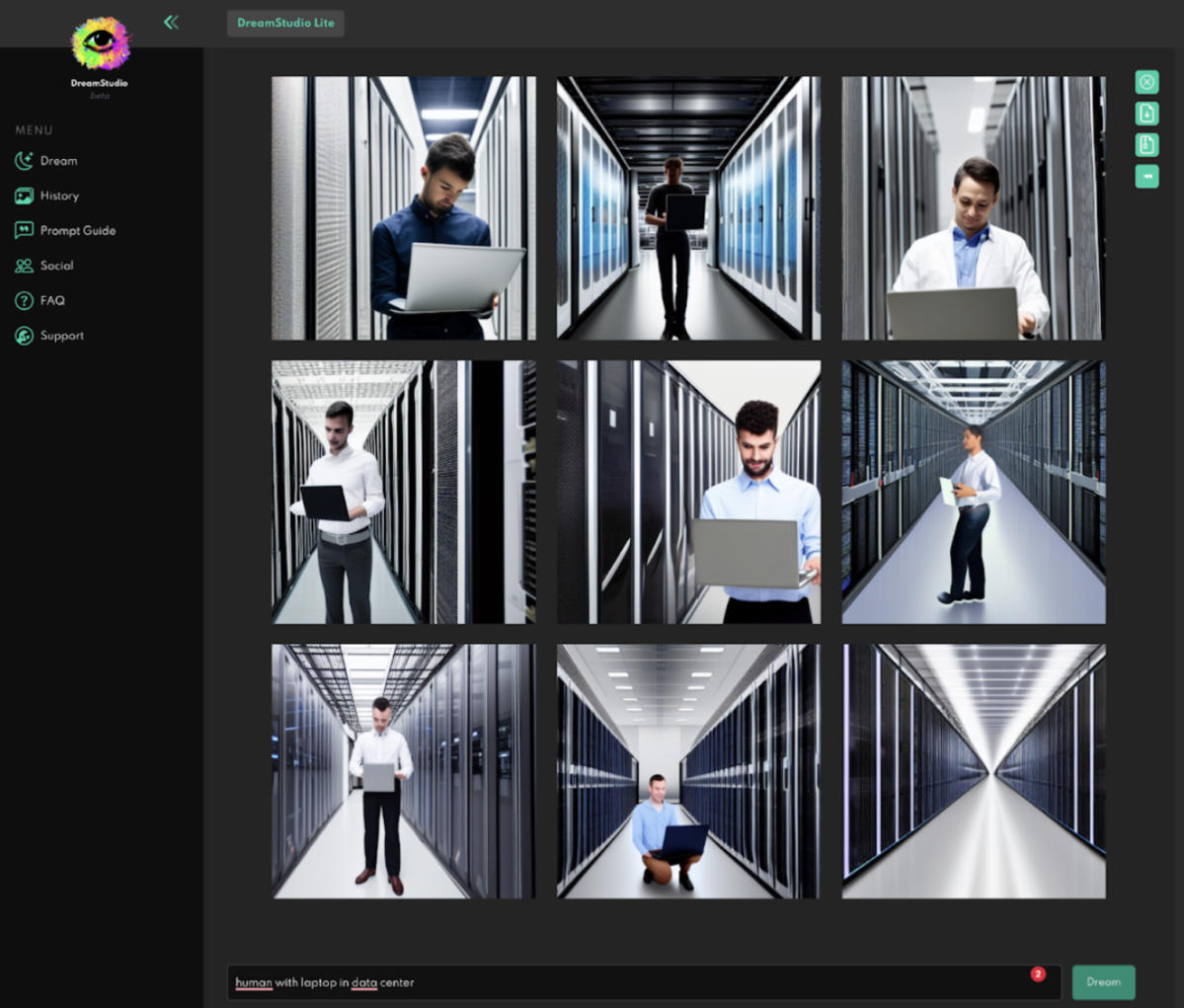

Stereotypes: the prompt “human with laptop in data center” will show almost exclusively European-looking men; other ethnicities or women you only get by specifying them.

Screenshot DreamStudio, prompting “human with laptop in data center”

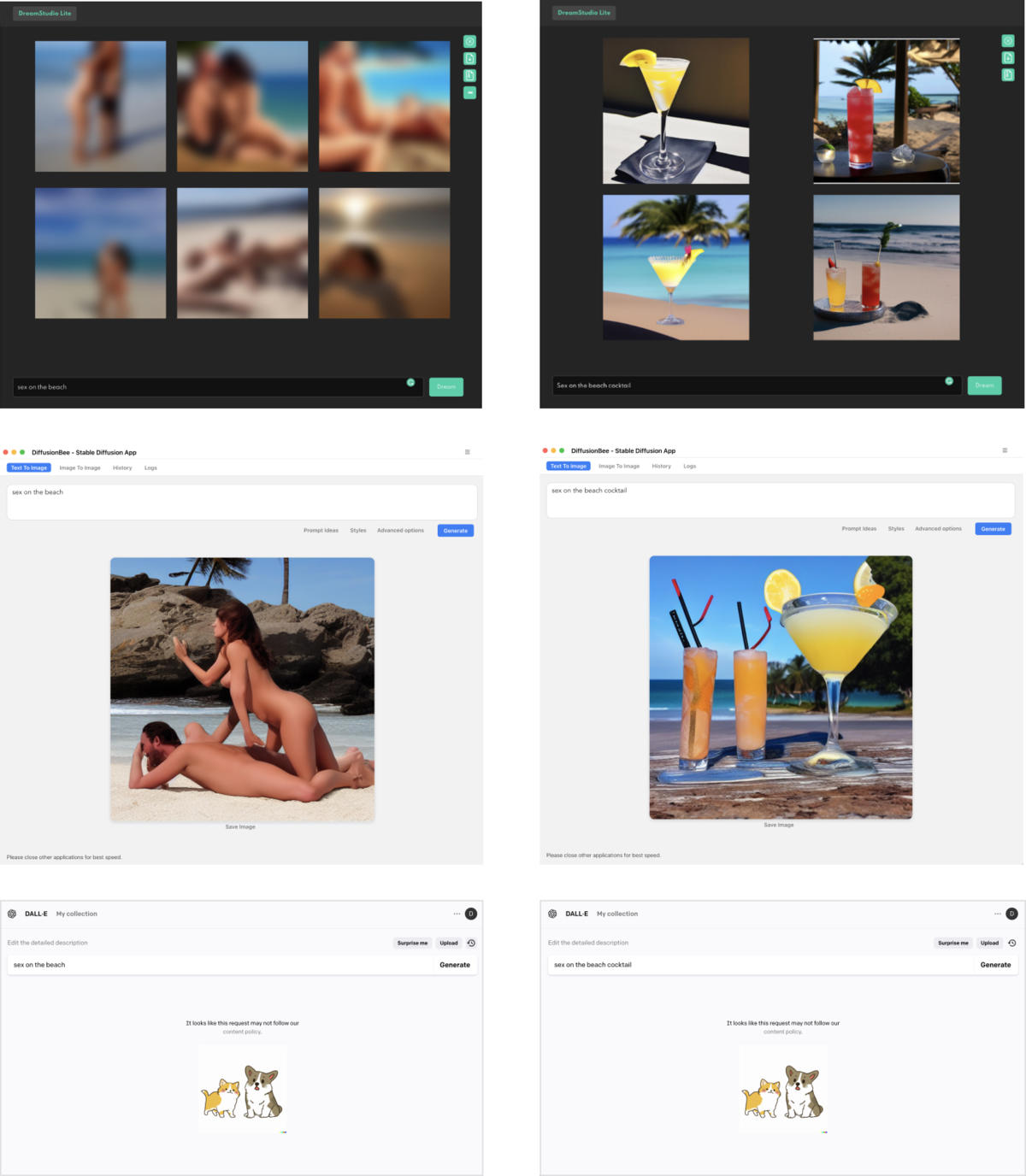

Censorship: the prompt "sex on the beach" is interpreted quite differently depending on the tool used. While DreamStudio has at least a vague idea of "sex," DiffusionBee is more explicit, albeit grotesque, and creates something that looks like Aphex Twin having intercourse. In contrast, DALL·E 2 completely refuses to depict anything – the latter, even if you add "cocktail" to the prompt.

“Sex on the Beach (Cocktail)”, Screenshots DreamStudio, DiffusionBee, DALL·E 2

Licenses & Ownership

Licenses, of course, are an issue in themselves. Who owns the outcome or the style of the artwork? The more popular these tools become, the more pressing this question becomes. AI-generated images not only flood social media channels like Instagram but also appear more and more on stock databases, forcing them to react. For example, Unsplash updated its submission guidelines and will not accept AI-generated images. This, of course, will not stop people from creating their own stock databases.

It seems tempting to create your own brand imagery with an AI. Still, you may consider reading the articles "The irony of Unsplash banning AI-generated images" or "Why You Should be Careful Using DALLE-2 & Midjourney Images for Commercial Purposes," pointing out some main legal issues:

- You can’t copyright AI-generated content, so it doesn’t actually belong to the customers. This means that you don’t own anything that you create.

- You risk getting into legal trouble because the images used to train these AI platforms are not allowed to be used for such commercial purposes.

- If you get into legal trouble, these companies don’t protect you in any way, so you will be paying for the copyright infringements yourself.

The irony of Unsplash banning AI-generated images, Jonathan Low, Sept. 27, 2022

Honor the artist

Greg Rutkowski, a Polish digital artist, may have spent years finding the distinctive style of his fantasy landscapes and building his career on it. He made illustrations for popular games such as "Dungeons & Dragons" or "Anno 1800". Now, with a simple text prompt including his name, e.g., "Wizard with sword fights a fierce dragon Greg Rutkowski," everyone can copy his style within a second – and Greg Rutkowski is not happy about it.

Rutkowski’s “Castle Defense, 2018” (left) and a Stable Diffusion prompted image (right) – from: “This artist is dominating AI-generated art. And he’s not happy about it.” by Melissa Heikkilä, September 16, 2022, MIT Technology Review

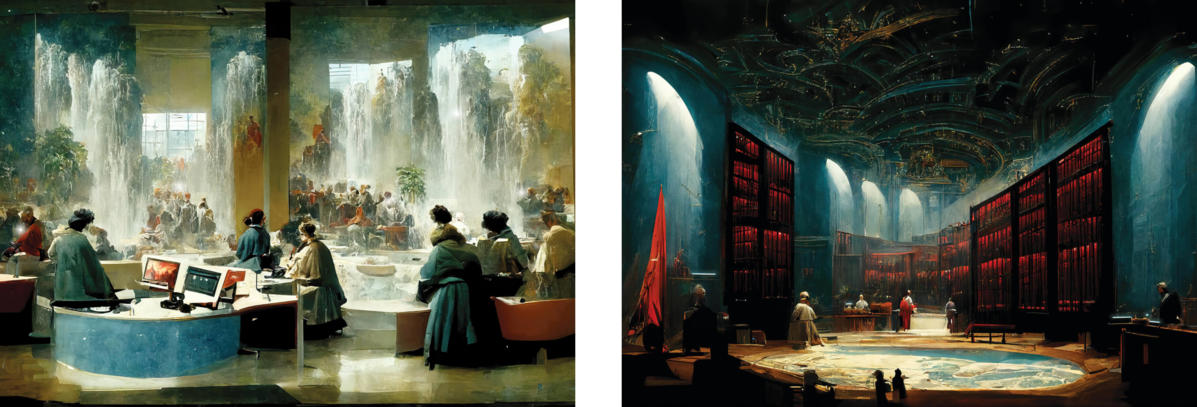

In turn, Fred Mann, a British multimedia artist, uses AI to create his stunning neural paintings. The artworks look like historic oil paintings by old masters, often containing elements that reference current digitalization.

'Live Stream'. 2022. Fred Mann & 'Holy Servers'. 2022. Fred Mann

Voices & Sounds

Putting the field of images aside for a moment: sounds and voices will also be driven by AI, if they are not already. In June 2022, Spotify bought the startup Sonantic. Sonantic delivers "compelling, lifelike performances with fully expressive AI-generated voices". In terms of voice, the movie "Her" is already here. Therefore, your next brand speaker is just around the corner:

What's Her Secret? – Sonantic - Acquired by Spotify

Avatars & CGI & Games

Just think about the possibilities that open up with AI in this field. Take a famous actor and let him speak your advertisement in several languages. Or, to take it further, why not save this budget and spend it on creating an artificial avatar of an imaginary person, exclusively designed for your needs, and let it speak your onboarding courses using text-to-speech prompts?

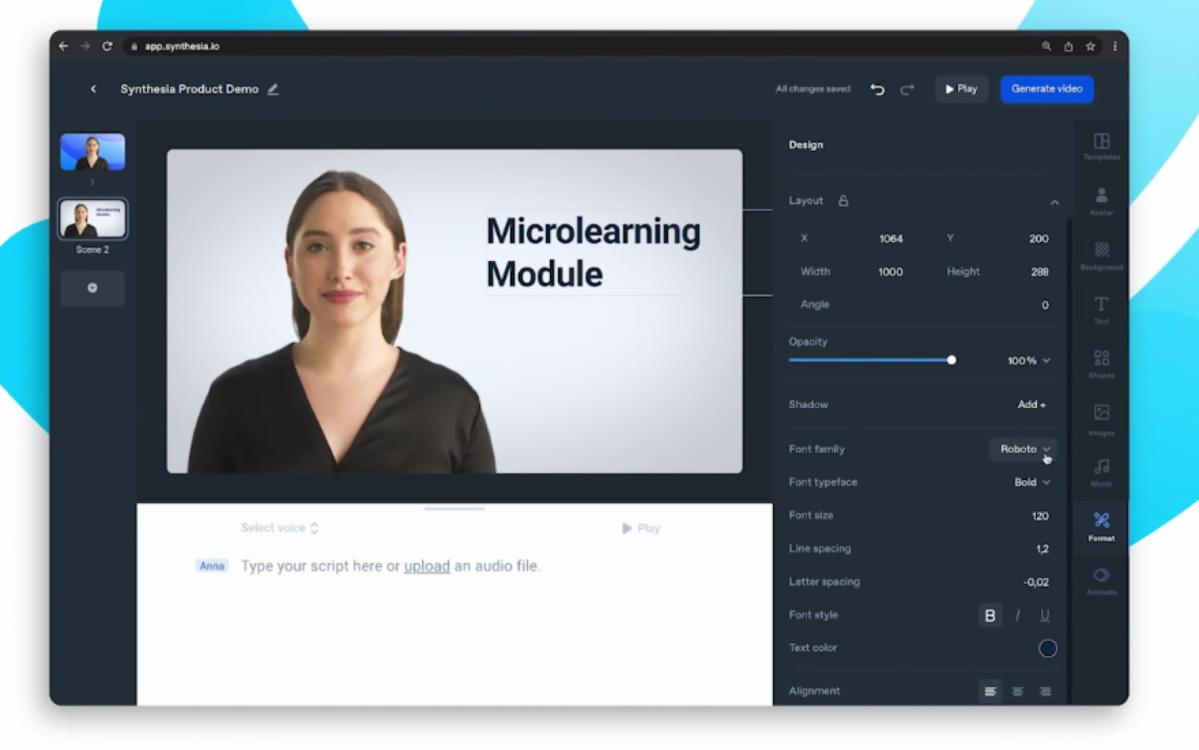

Sounds terrific? Well, Synthesia did and offers precisely this.

In April 2019, they hired David Beckham for the spot "Malaria Must Die", scanned his face, and let him speak in 9 languages. How they did it is explained in a behind-the-scenes video.

How we made David Beckham speak 9 languages, Zero Malaria Britain, Apr 9, 2019

As mentioned, Synthesia offers templates to use avatars in your presentation, which might also be your customers' next friendly new face.

Find the demo-video at Synthesia

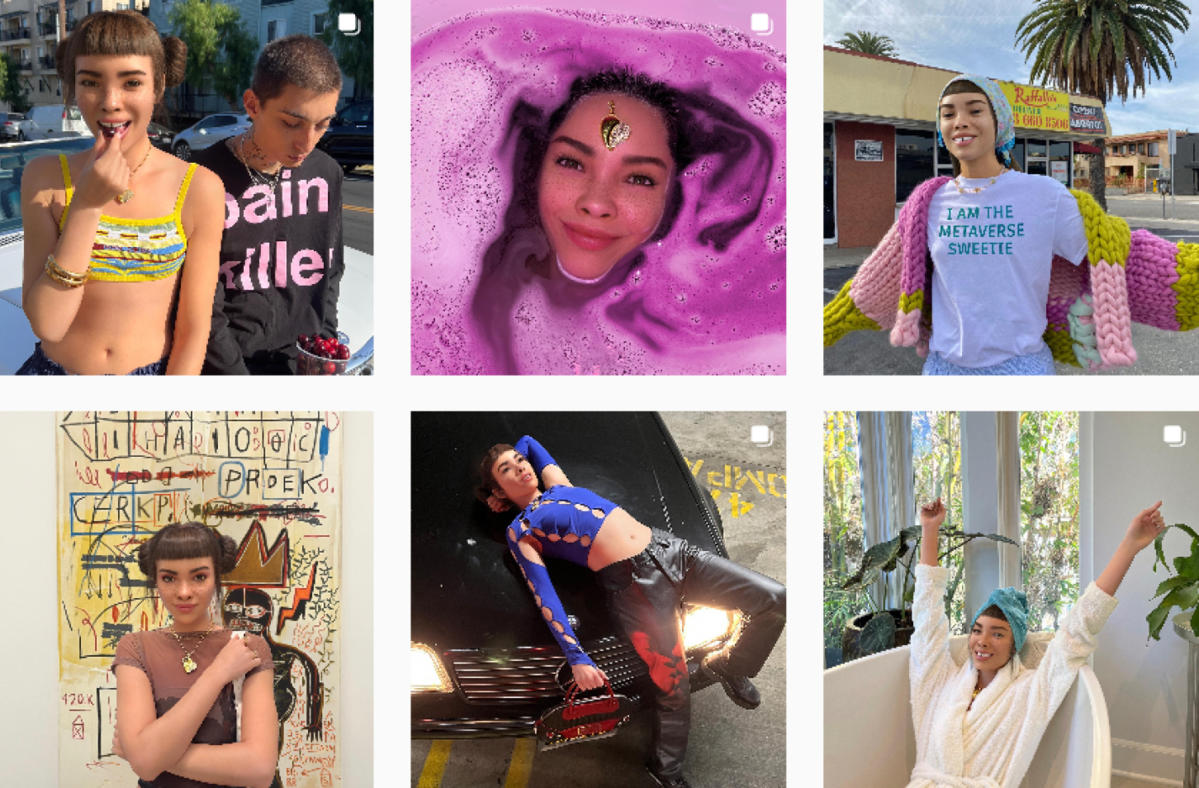

Speaking of virtual, however realistic, characters: You may have heard of the influencer Lil Miquela. Lil started a social media career in 2016, got hired by Dior, Prada, and Calvin Klein, and in 2018 Time magazine listed her as one of "The 25 Most Influential People on the Internet 2018". She has 2.9 million followers on Instagram. The creator of Lil and her "fascinating life" is the company Brud.

Digital creations like Lil may be the perfect advertising partners, as they do or wear whatever you think they should. They do not burn out, they stay young and hip, and they stay away from scandals.

Screenshot Instagram of @Lilmiquela

Creating whole new worlds is the home turf of games like Minecraft. Technical AI artist Sean Simon posted a video of his latest experiment with Stable Diffusion. He connected a Minecraft spectator via script to prompt Stable Diffusion. The results are already stunning, with incredible potential for the future. I assume we will soon see how Zuckerberg implements AI in his Metaverse – and maybe speech-to-code is just around the corner for everybody and will revolutionize the whole UI and web design industry.

DiffusionCraft AI by ThoseSixFaces, Oct 23, 2022

Epilogue

Don’t be scared. Be creative!

"It’s a magical world, Hobbes ol’ buddy ... let’s go exploring!" (Calvin and Hobbes, 1995) – so enough words have been written, try it out, make your own picture or text or avatar ... or whatever you have in mind.

- Have a look at these new tools

- Ask yourself disruptive questions: What will be your role in the creative process alongside such AI tools? How can you strengthen your personal position and uniqueness?

- Brainstorm how we can use them in the creative process, or even how they might impact other branches and fields (from architecture to zoology)

- Think about how we can optimize the UI to interact with these tools

Let's go back to the question raised at the beginning: "Should designers be afraid of AI?" ...

Computer says no – GIPHY